If a normal is just a direction, not a position, why does the position of the mesh change the normal map?

If a normal is just a direction and not a position, why do the placement of the object in the scene and its transformation (translation, rotation and scale) matter when baking an object-space or world-space normal map?

As we know, a normal is a direction (perpendicular to a face of the mesh). When we bake a normal map as an RGB image, we store the x, y, z components of that direction. To get those components we need a reference frame, just as placing a dot on graph paper needs a pair of axes with (0,0) as the reference point.
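A minimal sketch of how the three components end up as pixel values. It assumes the common convention of mapping each component from [-1, 1] to [0, 1] and then to 8 bits, i.e. rgb = (n * 0.5 + 0.5) * 255; the function names are hypothetical, and the exact convention (axis swizzle, rounding, bit depth) depends on the baker.

```python
import math

def encode_normal_to_rgb(normal):
    """Map a normal direction to 8-bit RGB, assuming rgb = (n * 0.5 + 0.5) * 255."""
    x, y, z = normal
    length = math.sqrt(x * x + y * y + z * z)
    # Normalise first so every component lies in [-1, 1].
    unit = (x / length, y / length, z / length)
    return tuple(round((c * 0.5 + 0.5) * 255) for c in unit)

def decode_rgb_to_normal(rgb):
    """Inverse mapping: 8-bit RGB back to an approximate direction in [-1, 1]."""
    return tuple(c / 255 * 2.0 - 1.0 for c in rgb)

print(encode_normal_to_rgb((0.0, 0.0, 1.0)))   # (128, 128, 255)
print(encode_normal_to_rgb((-1.0, 0.0, 0.0)))  # (0, 128, 128)
```

Only the direction is stored in the pixel; nothing about where the face sits ends up in the image.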

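The reference frame we pick changes the x, y, z values of a direction. More precisely, it is the orientation of the axes that matters: a direction has no position, so moving the origin alone does not change its components.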
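A small sketch of that distinction, under the assumption that a direction is represented as the difference of two points (the helper names and the sample points are hypothetical). Shifting the origin gives both points new coordinates but leaves their difference alone, while measuring the same direction along rotated axes gives different components.

```python
import math

def subtract(a, b):
    """Direction from point b to point a."""
    return tuple(ai - bi for ai, bi in zip(a, b))

def components_in_rotated_axes(v, angle):
    """Components of v measured along axes rotated by `angle` radians about Z."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    # Dot products with the rotated X axis (c, s, 0) and Y axis (-s, c, 0).
    return (c * x + s * y, -s * x + c * y, z)

tail, head = (1.0, 2.0, 0.0), (2.0, 2.0, 0.0)
direction = subtract(head, tail)
print(direction)  # (1.0, 0.0, 0.0)

# Move the origin: both points get new coordinates, the direction does not.
origin = (5.0, -3.0, 7.0)
shifted = subtract(subtract(head, origin), subtract(tail, origin))
print(shifted)  # (1.0, 0.0, 0.0)

# Rotate the axes by 90 degrees: the same direction gets new components.
rotated = components_in_rotated_axes(direction, math.radians(90))
print(tuple(round(v, 6) for v in rotated))  # (0.0, -1.0, 0.0)
```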

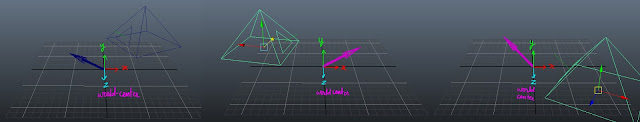

For example, take a pyramid and consider the normal of one of its faces.
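As a concrete sketch, the normal of a pyramid face can be computed from the cross product of two of its edges. The pyramid below (base corners at (±1, ±1, 0), apex at (0, 0, 1)) and the helper names are hypothetical; the vertex order decides which side the normal points to, and the order used here gives the outward normal of the face on the +X side.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def face_normal(p0, p1, p2):
    """Unit normal of triangle (p0, p1, p2); the vertex order picks which side it faces."""
    e1 = tuple(b - a for a, b in zip(p0, p1))
    e2 = tuple(b - a for a, b in zip(p0, p2))
    n = cross(e1, e2)
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)

# Hypothetical square pyramid: base corners at (±1, ±1, 0), apex at (0, 0, 1).
apex = (0.0, 0.0, 1.0)
n = face_normal((1.0, -1.0, 0.0), (1.0, 1.0, 0.0), apex)  # the face on the +X side
# "+ 0.0" only turns a possible -0.0 into 0.0 for display.
print(tuple(round(c, 4) + 0.0 for c in n))  # (0.7071, 0.0, 0.7071)
```

Because the normal is built from edge differences, shifting all three vertices by the same offset gives the same result; only rotating or non-uniformly scaling the face changes it.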

Object-space normal map:

To write an object-space normal, we measure its x, y, z components along the mesh's own local axes (the axes of the object's transform, centred on its origin).

The x, y, z values of an object-space normal stay the same however the mesh is rotated, because when we rotate the mesh, its local axes (its transformation axes) rotate with it.
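A small sketch of why object-space values survive rotation, assuming the object's transform is a pure rotation about Z (the helper names are hypothetical). Rotating the object changes where the face points in the scene, but measuring that direction along the rotated local axes (multiplying by the transpose, which is the inverse of a rotation matrix) recovers the same components every time.

```python
import math

def rotation_z(angle):
    """3x3 rotation matrix about the Z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def mat_vec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

# Object-space normal of the pyramid face on the +X side (measured along local axes).
object_normal = (math.sqrt(0.5), 0.0, math.sqrt(0.5))

for degrees in (0, 45, 90, 180):
    r = rotation_z(math.radians(degrees))
    in_scene = mat_vec(r, object_normal)              # where the face now points in the scene
    back_in_object = mat_vec(transpose(r), in_scene)  # measured along the rotated local axes
    print(degrees,
          tuple(round(c, 4) + 0.0 for c in in_scene),
          tuple(round(c, 4) + 0.0 for c in back_in_object))
```

The middle tuple changes with the angle, while the last one stays (0.7071, 0.0, 0.7071) on every line.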

World-space normal map:

To write a world-space normal, we measure its x, y, z components along the world axes, centred on the world origin.

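The world axes do not change when the mesh is placed differently, so the x, y, z values of a world-space normal change whenever the mesh's orientation changes: rotating it, or scaling it non-uniformly, tilts its faces relative to the fixed world axes. Translating the mesh alone does not change them, since a direction has no position.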
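A sketch of the world-space case, assuming the object's transform is built as scale, then rotation about Z, then translation (the function and parameter names are hypothetical, not any baker's API). Normals are transformed by the inverse transpose of the 3x3 part of the transform; for rotation times scale this works out to dividing the normal component-wise by the scale and then rotating it. Translation never enters the calculation.

```python
import math

def world_space_normal(object_normal, rotation_z_degrees=0.0,
                       scale=(1.0, 1.0, 1.0), translation=(0.0, 0.0, 0.0)):
    """World-space normal for an object transformed by T * Rz * S.

    Uses the inverse transpose of the 3x3 part (Rz * S), which equals
    Rz * S^-1. `translation` is accepted but deliberately unused: a
    normal is a direction, so moving the object cannot change it.
    """
    # Undo the scale on the normal (the S^-1 part of the inverse transpose).
    x, y, z = (n / k for n, k in zip(object_normal, scale))
    # Rotate about Z.
    a = math.radians(rotation_z_degrees)
    c, s = math.cos(a), math.sin(a)
    x, y = c * x - s * y, s * x + c * y
    # Re-normalise, since scaling changes the length.
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

def show(v):
    # "+ 0.0" only turns a possible -0.0 into 0.0 for display.
    return tuple(round(c, 4) + 0.0 for c in v)

face = (math.sqrt(0.5), 0.0, math.sqrt(0.5))  # +X face of the pyramid, object space

print(show(world_space_normal(face)))                                 # (0.7071, 0.0, 0.7071)
print(show(world_space_normal(face, translation=(10.0, 0.0, -3.0))))  # (0.7071, 0.0, 0.7071)
print(show(world_space_normal(face, rotation_z_degrees=90.0)))        # (0.0, 0.7071, 0.7071)
print(show(world_space_normal(face, scale=(2.0, 2.0, 2.0))))          # (0.7071, 0.0, 0.7071)
print(show(world_space_normal(face, scale=(2.0, 1.0, 1.0))))          # (0.4472, 0.0, 0.8944)
```

Translation and uniform scale leave the world-space normal unchanged, while rotation and non-uniform scale change it. The last result matches recomputing the face normal from the pyramid's vertices after stretching them by 2 along X.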
